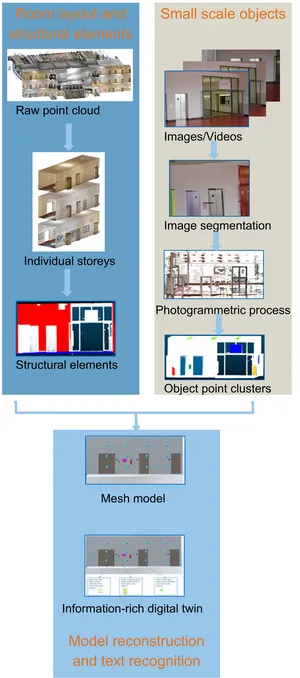

This project is about creating an information-rich digital twin of indoor environments. It is beneficial to have an information-rich digital twin of a building that represents the current state of the asset. However, the process of creating such a digital twin is extremely time-consuming and requires very high human effort, which makes the cost invested in creating a digital twin exceed the potential benefits it can provide. What we want to achieve is to reduce the human effort in this process by proposing methods that apply state-of-the-art deep learning technologies to automate the process.

The overall proposed approach uses laser-scanned point clouds and images as input. Firstly, it starts with segmenting a point cloud of a multi-story building into individual stories. Subsequently, two different approaches are proposed for buildings depending on whether they do or do not fulfill the Manhattan-world assumption. Both approaches use the semantic information extracted by point cloud deep learning. The void-growing method, designed for Manhattan-world buildings, starts with extracting the void spaces inside rooms. Space-bounding elements are then extracted based on the extracted void spaces. For buildings that do not fulfill the Manhattan-world assumption, planes in point clouds are extracted, intersected, and selected by a method based on energy optimization.

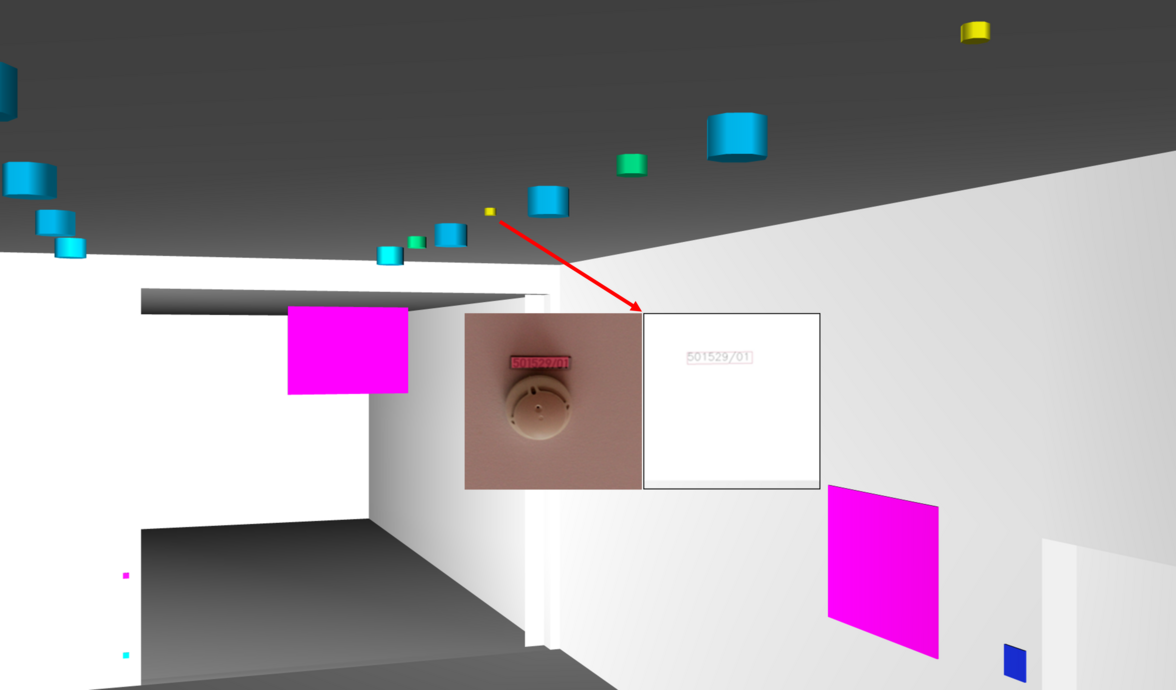

The next step is reconstructing small objects, where images are used to improve the results based on the finding that recognition of small objects in images outperforms that in point clouds. The photos taken by the camera can be registered with laser-scanned point clouds by the photogrammetric process, which means the photogrammetric point cloud works as a bridge to fuse data from different sources. Subsequently, state-of-the-art artificial neural networks of image segmentation are implemented. Then the recognized semantic information in the images is mapped to the laser-scanned point clouds by the camera matrices from the photogrammetric process. Predefined geometric primitives are then fitted to the point clusters with semantic information and added to the digital twin as simplified geometric information. In model enrichment, state-of-the-art deep learning models for text detection and recognition are applied to the images. Text information, such as serial numbers of objects and room numbers, is extracted from images and then mapped to the reconstructed objects in 3D space.